Understanding Hadoop MapReduce Step by Step with Multi-Node Examples

In the era of big data, processing massive datasets quickly and efficiently has become crucial for businesses and researchers alike. Hadoop MapReduce, a widely used framework, offers a scalable and fault-tolerant solution by breaking data processing into manageable tasks that run in parallel across multiple nodes in a distributed environment. But how exactly does it work, and how does it coordinate processing in such large-scale systems? In this article, we’ll take a step-by-step journey through Hadoop MapReduce, breaking down each phase of its execution with a practical example spread across multiple nodes. By the end, you’ll have a clear understanding of how this framework powers workloads ranging from search indexing to data analytics.

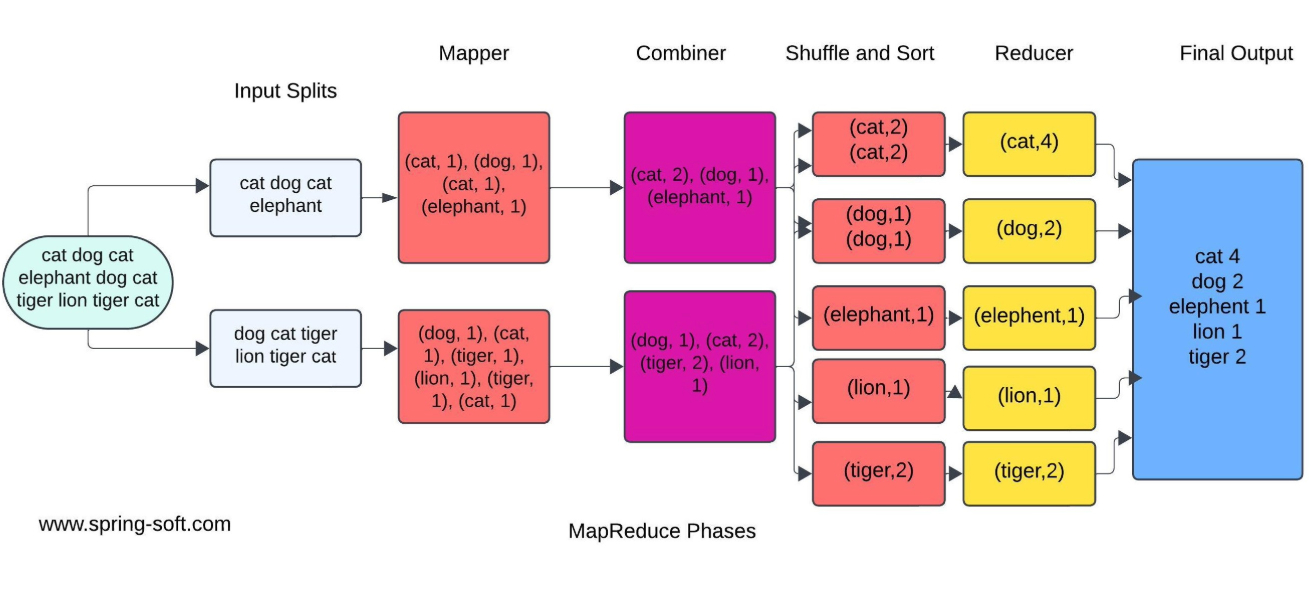

Breaking Down MapReduce: Phase by Phase

1. Map Phase

Processes input data and generates intermediate key-value pairs.

2. Combiner Phase

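Acts as a mini-reducer. It aggregates intermediate key-value pairs locally on each Mapper node before the shuffle and sort phase, which reduces the amount of data transferred to the Reducers. The Combiner is optional, and Hadoop may run it zero, one, or several times, so it should only perform associative and commutative operations, such as summing counts, whose final result does not depend on how often they are applied.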

3. Partitioner Phase

Determines how the intermediate key-value pairs are distributed to the Reducer nodes. It ensures that all records with the same key end up on the same Reducer.

4. Shuffle and Sort Phase

Transfers intermediate key-value pairs from the Mapper to the Reducer nodes and sorts them by key.

5. Reduce Phase

Aggregates and processes the intermediate key-value pairs to produce the final output.

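The Combiner and Partitioner play different roles: the Combiner is an optional optimization that shrinks the data sent over the network, while the Partitioner decides which Reducer receives each key, so a well-balanced Partitioner spreads the work evenly across Reducers.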

Let’s Understand Each Phase with a Classic Example: Word Count

To illustrate, we’ll count the frequency of each word in a text file. To simulate distributed processing, we’ll split the input data across two nodes.

Example Input File Data

cat dog cat elephant dog cat tiger lion tiger cat

1. Segregate Data Across Two Nodes

To process this input on two nodes, we divide the input data into two splits. Assume:

Node 1 gets:

cat dog cat elephant

Node 2 gets:

dog cat tiger lion tiger cat
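In a real cluster, Hadoop's input format divides files into splits by byte range (typically aligned with HDFS blocks), and each split is handed to one Mapper. The minimal Python sketch below only mimics that idea by cutting the example's words at a chosen position; the `make_splits` helper and the cut after the fourth word are illustrative assumptions, not Hadoop behavior.

```python
def make_splits(text, boundaries):
    """Cut the words of `text` into splits that end at the given word positions.

    Hypothetical helper for illustration: Hadoop splits files by byte ranges,
    not by word counts, and its record readers deal with lines that straddle
    a split boundary.
    """
    words = text.split()
    splits = []
    start = 0
    for end in list(boundaries) + [len(words)]:
        splits.append(" ".join(words[start:end]))
        start = end
    return splits


text = "cat dog cat elephant dog cat tiger lion tiger cat"
node_1_split, node_2_split = make_splits(text, [4])  # assumed cut after 4 words
print(node_1_split)  # cat dog cat elephant
print(node_2_split)  # dog cat tiger lion tiger cat
```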

2. Map Phase Output

Each Mapper processes its split and outputs intermediate key-value pairs:

Node 1 Mapper Output:

(cat, 1), (dog, 1), (cat, 1), (elephant, 1)

Node 2 Mapper Output:

(dog, 1), (cat, 1), (tiger, 1), (lion, 1), (tiger, 1), (cat, 1)
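Here is a small Python sketch of this step: one mapper function applied to each node's split independently, with no communication between the nodes. The node names and the `map_split` helper are hypothetical; on a real cluster each Mapper runs as its own task, ideally on a node that already stores its split.

```python
def map_split(split_text):
    """Word-count Mapper: emit (word, 1) for every word in one split."""
    for word in split_text.split():
        yield (word, 1)


# The two input splits from the example, keyed by hypothetical node names
splits = {
    "node_1": "cat dog cat elephant",
    "node_2": "dog cat tiger lion tiger cat",
}

# Every node runs the same Mapper code, but only over its own split
mapper_outputs = {node: list(map_split(text)) for node, text in splits.items()}

for node, pairs in mapper_outputs.items():
    print(node, pairs)
```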

3. Combiner Phase

Now, each node applies the Combiner locally to reduce the volume of data by aggregating key-value pairs.

Node 1 Combiner Output:

(cat, 2), (dog, 1), (elephant, 1)

Node 2 Combiner Output:

(dog, 1), (cat, 2), (tiger, 2), (lion, 1)

The Combiner performs a local reduce operation for each key on its respective node. At this stage, the counts are only aggregated locally within each node.
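A minimal sketch of the per-node Combiner, assuming the Mapper outputs listed above. It also checks the property that makes summing safe to use as a Combiner: because addition is associative and commutative, adding up the locally combined counts gives the same totals as adding up the raw Mapper pairs. The `combine` helper is an illustrative name, not part of any Hadoop API.

```python
from collections import Counter

# Mapper outputs for the two nodes, copied from the example above
mapper_outputs = {
    "node_1": [("cat", 1), ("dog", 1), ("cat", 1), ("elephant", 1)],
    "node_2": [("dog", 1), ("cat", 1), ("tiger", 1), ("lion", 1),
               ("tiger", 1), ("cat", 1)],
}


def combine(pairs):
    """Local reduce: sum the counts for each key seen on this node only."""
    totals = Counter()
    for word, count in pairs:
        totals[word] += count
    return dict(totals)


combiner_outputs = {node: combine(pairs) for node, pairs in mapper_outputs.items()}
print(combiner_outputs)

# Summing the raw pairs and summing the combined pairs must agree
all_raw_pairs = [pair for pairs in mapper_outputs.values() for pair in pairs]
all_combined_pairs = [pair for out in combiner_outputs.values() for pair in out.items()]
from_raw = combine(all_raw_pairs)
from_combined = combine(all_combined_pairs)
assert from_raw == from_combined
```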

4. Shuffle and Sort Phase

Data Transfer:

The intermediate key-value pairs generated by the Mappers (or Combiners, if used) are sent to the appropriate Reducer nodes.

Partitioner:

The Partitioner ensures that all values associated with the same key are routed to the same Reducer.

For instance:

- (cat, 2) from Node 1 and (cat, 2) from Node 2 are sent to the same Reducer.

- Similarly, (dog, 1) from both nodes ends up together on a single Reducer, which may or may not be the one that receives cat.
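Hadoop's default partitioner (`HashPartitioner`) hashes each key and takes the result modulo the number of Reducers. The Python sketch below follows the same idea under two assumptions: the job has two Reducers, and CRC32 from the standard `zlib` module replaces Java's `hashCode()`, so the concrete Reducer numbers will differ from a real Hadoop job. CRC32 is used because Python's built-in `hash()` for strings is randomized per process, which would send the same key to different Reducers from different nodes.

```python
import zlib


def partition(key, num_reducers):
    """Return the index of the Reducer that should receive `key`.

    Same idea as Hadoop's default HashPartitioner (hash modulo the number of
    Reducers), but with CRC32 instead of Java's hashCode(), so the concrete
    assignments differ from a real Hadoop job.
    """
    # zlib.crc32 gives the same value in every process, unlike Python's
    # built-in hash() for strings, which is randomized per interpreter run.
    return zlib.crc32(key.encode("utf-8")) % num_reducers


# Combiner outputs from the two nodes in the example
combiner_outputs = {
    "node_1": [("cat", 2), ("dog", 1), ("elephant", 1)],
    "node_2": [("dog", 1), ("cat", 2), ("tiger", 2), ("lion", 1)],
}

NUM_REDUCERS = 2  # assumption: the job is configured with two Reducers

# Record which Reducer each (node, key) record would be sent to
routed = {}
for node, pairs in combiner_outputs.items():
    for key, _count in pairs:
        routed[(node, key)] = partition(key, NUM_REDUCERS)

print(routed)

# The same key always lands on the same Reducer, whichever node emitted it
assert routed[("node_1", "cat")] == routed[("node_2", "cat")]
assert routed[("node_1", "dog")] == routed[("node_2", "dog")]
```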

Grouping by Key:

During this process, the framework groups all values for the same key together. For example:

- The key `cat` has [2, 2]

- The key `dog` has [1, 1]

Sorting:

As the name suggests, the data is also sorted by key. Sorting places all values for a key next to each other, so each Reducer can process its keys in order, one group at a time.

Input to Shuffle and Sort Phase:

This would be the output of the Combiner from the two nodes:

Node 1 Combiner Output:

(cat, 2), (dog, 1), (elephant, 1)

Node 2 Combiner Output:

(cat, 2), (dog, 1), (tiger, 2), (lion, 1)

Output of Shuffle and Sort Phase:

(cat, [2, 2]) (dog, [1, 1]) (elephant, [1]) (lion, [1]) (tiger, [2])

Each group is now ready for the Reduce phase, where the values associated with each key will be aggregated to produce the final results.
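The grouping and sorting can be sketched in a few lines of Python, assuming the two Combiner outputs above. This single-process version collects everything into one dictionary; in Hadoop, each Reducer instead fetches only its own partition from every Mapper and merges the already-sorted pieces. The order of values within a key (here, node order) is not something Hadoop guarantees.

```python
from collections import defaultdict

# Combiner outputs from the two nodes, as listed above
combiner_outputs = {
    "node_1": [("cat", 2), ("dog", 1), ("elephant", 1)],
    "node_2": [("cat", 2), ("dog", 1), ("tiger", 2), ("lion", 1)],
}


def shuffle_and_sort(outputs_per_node):
    """Group values by key across all nodes, then order the groups by key."""
    grouped = defaultdict(list)
    for pairs in outputs_per_node.values():
        for key, value in pairs:
            grouped[key].append(value)
    # Sorting by key yields one contiguous (key, [values]) group per key
    return sorted(grouped.items())


reducer_input = shuffle_and_sort(combiner_outputs)
print(reducer_input)
# [('cat', [2, 2]), ('dog', [1, 1]), ('elephant', [1]), ('lion', [1]), ('tiger', [2])]
```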

5. Reduce Phase: Aggregation of Values

The Reducer processes the output of the Shuffle and Sort Phase. It takes the grouped key-value pairs (keys and their corresponding lists of values) and aggregates them to produce the final output.

Input to Reducers:

Here is the data passed to the Reducers after the Shuffle and Sort Phase:

(cat, [2, 2])

(dog, [1, 1])

(elephant, [1])

(lion, [1])

(tiger, [2])

Reducer Operation:

The Reducer iterates over each key and its list of values, performs aggregation (in this case, summing up the values), and generates the final output.

Output from Reducer:

cat: 4

dog: 2

elephant: 1

lion: 1

tiger: 2

Final Output Explanation

The Reduce phase combines all the values for each key into a single final value. For example:

- For the key `cat`, the values [2, 2] are summed to get 4.

- For the key `dog`, the values [1, 1] are summed to get 2.

This gives us the final word counts for the dataset.
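To tie the phases together, the sketch below simulates the whole two-node word count in a single Python process. It is an illustration under stated assumptions, not Hadoop code: each string in the input list stands in for one node's split, each Reducer is just a dictionary (its "inbox"), and `partition` uses CRC32 as a stand-in for Hadoop's hash partitioner.

```python
import zlib
from collections import Counter, defaultdict


def mapper(split_text):
    # Map: emit (word, 1) for every word in this node's split
    return [(word, 1) for word in split_text.split()]


def combiner(pairs):
    # Combine: sum counts on the node before anything is sent over the network
    totals = Counter()
    for word, count in pairs:
        totals[word] += count
    return list(totals.items())


def partition(key, num_reducers):
    # Partition: deterministic hash, so each key maps to exactly one Reducer
    return zlib.crc32(key.encode("utf-8")) % num_reducers


def reducer(key, values):
    # Reduce: add up the partial counts for one key
    return key, sum(values)


def run_word_count(splits, num_reducers=2):
    # Shuffle: one inbox per Reducer, collecting values grouped by key
    inboxes = [defaultdict(list) for _ in range(num_reducers)]
    for split_text in splits:  # each split plays the role of one node
        for key, value in combiner(mapper(split_text)):
            inboxes[partition(key, num_reducers)][key].append(value)

    # Sort and Reduce: each Reducer walks through its own keys in sorted order
    results = {}
    for inbox in inboxes:
        for key in sorted(inbox):
            word, total = reducer(key, inbox[key])
            results[word] = total
    return results


counts = run_word_count(["cat dog cat elephant", "dog cat tiger lion tiger cat"])
print(dict(sorted(counts.items())))
# {'cat': 4, 'dog': 2, 'elephant': 1, 'lion': 1, 'tiger': 2}
```

Whichever Reducer a key is assigned to, every key is handled by exactly one Reducer, so the merged result matches the final counts above regardless of how the hash distributes the keys.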

Sample Code for the Mapper, Combiner, and Reducer Phases

Map Phase Code (Python):

```python
def map_function(input_line):
    # Split the line into words
    words = input_line.strip().split()

    # Emit key-value pairs (word, 1) for each word
    for word in words:
        yield (word, 1)


# Example input:
input_line = "cat dog cat elephant dog cat"
mapped_data = list(map_function(input_line))
print(mapped_data)
# Mapper Output: [('cat', 1), ('dog', 1), ('cat', 1), ('elephant', 1), ('dog', 1), ('cat', 1)]
```

Combiner Phase Code (Python):

```python
from collections import defaultdict


def combiner_function(mapped_data):
    # Dictionary to aggregate word counts
    word_counts = defaultdict(int)

    # Combine counts locally
    for word, count in mapped_data:
        word_counts[word] += count

    # Emit combined results
    for word, total_count in word_counts.items():
        yield (word, total_count)


# Input to Combiner (output from Mapper)
mapped_data = [
    ('cat', 1), ('dog', 1), ('cat', 1),
    ('elephant', 1), ('dog', 1), ('cat', 1)
]

# Run the Combiner
combined_data = list(combiner_function(mapped_data))
print(combined_data)
# Output: [('cat', 3), ('dog', 2), ('elephant', 1)]
```

Reducer Phase Code (Python):

```python
def reduce_function(key, values):
    # Aggregate the values (sum them up)
    total = sum(values)
    return (key, total)


# Input to Reducer
input_data = {
    'cat': [2, 2],
    'dog': [1, 1],
    'elephant': [1],
    'lion': [1],
    'tiger': [2]
}

# Reducer Output
final_output = [reduce_function(key, values) for key, values in input_data.items()]
print(final_output)
# Output: [('cat', 4), ('dog', 2), ('elephant', 1), ('lion', 1), ('tiger', 2)]
```
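Hadoop itself is written in Java, so Python functions like the ones above are usually run on a cluster through Hadoop Streaming. Streaming feeds input lines to the mapper's standard input, treats each output line as a key and value separated by a tab, and delivers the reducer's input lines sorted by key, leaving the reducer to detect where one key's group ends. The sketch below adapts the word count to that contract; the file name `wordcount.py` and the `map`/`reduce` argument convention are assumptions, and the exact command for submitting a Streaming job depends on your Hadoop installation.

```python
import sys
from itertools import groupby


def streaming_mapper(lines):
    """Map step: emit one 'word<TAB>1' line per word."""
    for line in lines:
        for word in line.split():
            yield f"{word}\t1"


def streaming_reducer(lines):
    """Reduce step: sum counts per word.

    Assumes the input lines are already sorted by key, as Hadoop Streaming
    provides for reducer input, so all lines for one word are adjacent.
    """
    # Parse each non-empty 'key<TAB>value' line into a [key, value] pair
    records = (line.rstrip("\n").split("\t", 1) for line in lines if line.strip())
    # groupby only merges adjacent records, which is why sorted input matters
    for word, group in groupby(records, key=lambda record: record[0]):
        total = sum(int(count) for _, count in group)
        yield f"{word}\t{total}"


def run(stage, stdin=sys.stdin, stdout=sys.stdout):
    """Run the 'map' or 'reduce' stage over stdin, writing lines to stdout."""
    step = streaming_mapper if stage == "map" else streaming_reducer
    for output_line in step(stdin):
        stdout.write(output_line + "\n")


if __name__ == "__main__" and len(sys.argv) > 1:
    # Hypothetical invocation: `python wordcount.py map` or `python wordcount.py reduce`
    run(sys.argv[1])
```

Before submitting to a cluster, you can approximate the job locally with a pipeline such as `cat input.txt | python wordcount.py map | sort | python wordcount.py reduce` (with a hypothetical `input.txt`), where `sort` plays the role of the shuffle and sort phase.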

Key Outputs of Each Phase in a Simple Table

| Phase | Input | Output | Key Role |
|---|---|---|---|
| Map | Input split (e.g., “cat dog cat…”) | Key-value pairs (e.g., (cat, 1)) | Processes input data |
| Combiner | Mapper output | Locally aggregated counts (e.g., (cat, 2)) | Reduces data size locally |
| Shuffle & Sort | Combiner output | Grouped and sorted data (e.g., (cat, [2, 2])) | Routes data to Reducer nodes |
| Reduce | Grouped data | Final output (e.g., cat: 4) | Aggregates and finalizes results |

Real-World Applications of MapReduce

- Search Engine Indexing: Google developed MapReduce and used it to build the index behind its web search. The Map phase extracts information such as keywords and metadata from crawled pages, while the Reduce phase organizes and aggregates this data into an efficient, searchable index.

- Log Analysis: Many organizations use MapReduce to analyze server logs. For instance:
  - Map Phase: Parses log files to extract fields such as IP addresses or response times.
  - Reduce Phase: Aggregates data to identify patterns, such as the most visited web pages or peak traffic times.

- Social Media Analytics: Social media platforms process user-generated content and interactions at scale:
  - Map Phase: Processes user data, hashtags, likes, and shares.
  - Reduce Phase: Aggregates this data to uncover trends, such as popular hashtags or user engagement metrics.

- Fraud Detection in Banking: Financial institutions use MapReduce for fraud detection by analyzing millions of transactions:
  - Map Phase: Identifies unusual patterns, such as multiple transactions in a short time.
  - Reduce Phase: Aggregates flagged transactions to highlight suspicious activity.

- Recommendation Systems: E-commerce platforms like Amazon and streaming services like Netflix use MapReduce for personalized recommendations:
  - Map Phase: Processes user behavior, such as clicks, views, and purchases.
  - Reduce Phase: Aggregates this data to suggest products or shows.

- Genomic Data Analysis: In bioinformatics, MapReduce processes genetic data to identify patterns:
  - Map Phase: Analyzes DNA sequences.
  - Reduce Phase: Aggregates similar patterns to uncover genetic variations or mutations.

- Weather Data Processing: Meteorological agencies use MapReduce to analyze climate data from sensors around the globe:
  - Map Phase: Processes data from individual sensors (e.g., temperature, humidity).
  - Reduce Phase: Aggregates data to generate weather models and forecasts.

- Retail Analytics: Retail companies use MapReduce to process sales and inventory data:
  - Map Phase: Processes sales data to extract product, location, and revenue details.
  - Reduce Phase: Aggregates this data to identify best-selling items or regions with high demand.
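The log-analysis use case follows the same pattern as word count. The sketch below assumes a made-up, space-separated log format (`<ip> <method> <path> <status>`) and sample lines invented purely for illustration; the Map step emits `(path, 1)` and the Reduce step sums hits per path to find the most visited page. For brevity, the reduce step is collapsed into a single `Counter` instead of running once per key on separate Reducers.

```python
from collections import Counter

# Hypothetical access-log lines in an assumed "<ip> <method> <path> <status>" format
log_lines = [
    "10.0.0.1 GET /home 200",
    "10.0.0.2 GET /products 200",
    "10.0.0.1 GET /home 200",
    "10.0.0.3 POST /cart 500",
    "10.0.0.2 GET /home 304",
]


def map_log_line(line):
    # Map: parse one line and emit (path, 1); skip lines that don't fit the format
    fields = line.split()
    if len(fields) == 4:
        yield (fields[2], 1)


def reduce_page_hits(pairs):
    # Reduce: sum the hits for each path
    hits = Counter()
    for path, count in pairs:
        hits[path] += count
    return hits


pairs = [pair for line in log_lines for pair in map_log_line(line)]
hits = reduce_page_hits(pairs)
print(hits.most_common(1))  # [('/home', 3)]
```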

Conclusion

Hadoop MapReduce is a powerful framework that simplifies the processing of massive datasets by distributing the work across a cluster. Through its structured phases (Map, Combine, Partition, Shuffle and Sort, and Reduce), it turns raw data into meaningful results. By walking through a two-node word count step by step, we’ve seen how MapReduce splits work across nodes, shrinks intermediate data locally, and routes each key to a single Reducer, which is the foundation of its scalability. Whether you’re diving into big data for the first time or looking to optimize existing workflows, understanding the inner workings of MapReduce will help you design efficient large-scale data processing jobs. The tools for data processing continue to evolve, but the principles of MapReduce remain foundational for handling large-scale data challenges.

Author: Mohammad J Iqbal